An introduction to SIMD and ISPC in Rust

An introduction to SIMD and ISPC in Rust

We don't use our hardware effectively

Single instruction, multiple data (SIMD) allows for your CPU to operate on multiple bits of data per cycle. SIMD isn't easy but it is endlessly fascinating. There's an odd sense of joy gained from peering high level to low through the lens of the compiler and raw assembly. It's the perfect knife edge between awe and expletives.

Thanks to autovectorization compilers can give you many of the benefits of SIMD with little work. Sadly that's not guaranteed. As noted by Lucas Meijer discussing the dangers of relying on autovectorization by the compiler:

I should be able to say “if this loop for some reason doesn’t vectorize, that should be a compiler error, not a ‘oh code is now just 8x slower but it still produces correct values, no biggy!’”

A tiny change in your code can destroy autovectorization with zero warning. We'll see below that tiny inefficiencies in your code can steal an enormous amount of performance.

No matter how smart compilers get they'll likely never be able to perfectly optimize complex cases. Many times such optimizations require rethinking and recomposing the problem itself, not just tweaking a few lines of code.

Leaving those cases unoptimized can be tragic. Examples exist of stupendous speedups across a variety of tasks. Fast compression, ripgrep, roaring bitmaps that sit at the base of nearly every search engine, parsing JSON, vectorized emulation of 16 32-bit VMs per AVX-512 thread, ... Our hardware is capable of so much more and we can unlock it.

If we're particularly smart we can decompose a large complex problem into a tiny one and only spend our time hand optimizing that minimal use case. An example of this is BLIS:

BLIS is a portable software framework for instantiating high-performance BLAS-like dense linear algebra libraries. The framework was designed to isolate essential kernels of computation that, when optimized, immediately enable optimized implementations of most of its commonly used and computationally intensive operations.

Even separate to all this, and if theoretically we were doomed to be beaten by the compiler forever, it's still worth our time to investigate what's happening under the covers.

At worst you'll come away with a better understanding of how we're standing on the shoulders of giants. At best you may realize the giants were asleep this whole time and that standing on their shoulders works best when they're standing themselves.

In this post we'll use a simple example to pull apart some of the complexities and possibilities of SIMD.

Task: pseudo dot product

The dot product between two vectors is z[i] = x[i] * y[i], or \(z_i = \sum_i x_i y_i \) if you prefer the mathematical notation.

We'll be performing a pseudo dot product, specifically z[i] += x[i] * y[i], for two reasons: first, the compiler is too smart and will remove all our redundant loops as they're not used, and second, we want to demonstrate the fused multiply add (FMA) SIMD intrinsic.

Benchmark

For the benchmark we'll be performing a tight loop of pseudo dot products on 1024 dimensional vectors 10 million times. I'll be using an Intel Core i7-7700k CPU which supports various SIMD instruction sets up to and including AVX-2 instructions.

All code will be compiled and benchmarked using RUSTFLAGS="-C target-cpu=native", which essentially says "optimize for the CPU on this machine", and cargo run --release (release builds) to perform builds with full optimization.

For analyzing the resulting assembly we'll be using cargo asm, Linux's perf, and Intel's vTune.

For the final results we'll be using the average runtime determined by hyperfine, a command-line benchmarking tool available from Rust's Cargo.

Version 1: Naive Rust and naive C

Naive C:

void dotp(float *array1, float *array2, float *dest, size_t N) {

for (size_t i = 0; i < N; ++i) {

dest[i] += array1[i] * array2[i];

}

}

Naive Rust:

fn dotp(x: &[f32], y: &[f32], z: &mut [f32]) {

for ((a, b), c) in x.iter().zip(y.iter()).zip(z.iter_mut()) {

*c += a * b;

//*c = a.mul_add(*b, *c);

}

}

With Rust we have the option to use fused multiply add (FMA) to collapse (vmulps, vaddps) into a single instruction (vfmadd213ps) by using a.mul_add(b, c). This can improve performance as well as improve accuracy as the former uses two rounding operations, one per operation, whilst the latter only uses one. This optimization is not done by default by Rust for reproducibility reasons. In our case there is no substantial runtime difference in performance.

Results:

- Naive C: 809.9 ms ± 7.3 ms

- Naive Rust (

c += a * b): 890.4 ms ± 11.8 ms - Naive Rust (

mul_add): 894.2 ms ± 17.4 ms

Analysis using cargo asm

Analysis using perf

Version 2: Rust SIMD intrinsics with and without inline

#[inline(never)]

fn simddotp(x: &[f32], y: &[f32], z: &mut [f32]) {

for ((a, b), c) in x

.chunks_exact(8)

.zip(y.chunks_exact(8))

.zip(z.chunks_exact_mut(8))

{

unsafe {

let x_a = _mm256_loadu_ps(a.as_ptr());

let y_a = _mm256_loadu_ps(b.as_ptr());

let r_a = _mm256_loadu_ps(c.as_ptr());

_mm256_storeu_ps(c.as_mut_ptr(), _mm256_fmadd_ps(x_a, y_a, r_a));

}

}

}

Here we step over our vector eight floats at a time (chunks_exact(8)) and use the load (_mm256_loadu_ps), fused multiply add (_mm256_fmadd_ps), and store (_mm256_storeu_ps) intrinsics. Note these are all unsafe instructions as we're dealing with pointers.

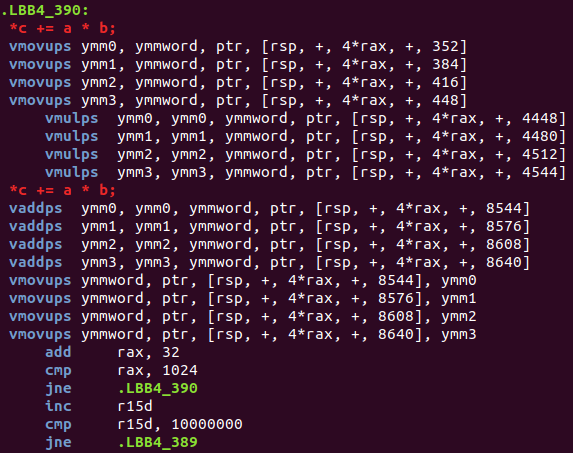

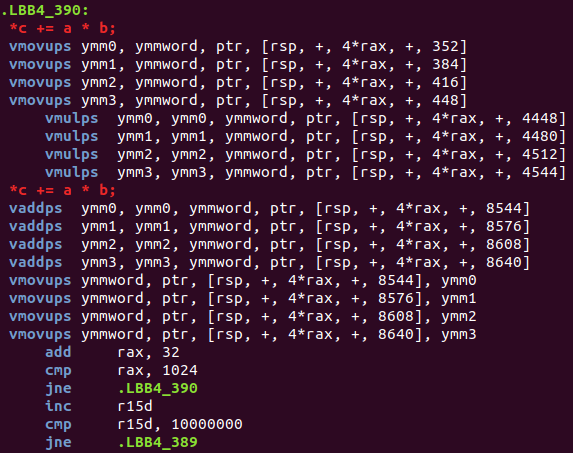

Inlining allows for re-ordering of instructions as long as those instructions don't interfere with each other. In our case this appears to cause issues, likely stalls, as blocks of up to eight moves (vmovups) are scheduled in one go. With so many loads we don't take advantage of the ability to load and multiply at the same time. By turning off inlining we're able to prevent that and the given instructions (move, move, mul + move, save) are instead unrolled four times.

An interesting aside: Rust may prevent certain code from being inlined if it contains SIMD intrinsics as those SIMD intrinsics might not be supported for all the hardware the binary is intended for.

Results:

- Naive C: 809.9 ms ± 7.3 ms

- Naive Rust (

c += a * b): 890.4 ms ± 11.8 ms - Naive Rust (

mul_add): 894.2 ms ± 17.4 ms - Rust SIMD (inline allowed): 887.4 ms ± 7.5 ms

- Rust SIMD (never inline): 734.4 ms ± 13.6 ms

Analysis using perf

Version 3: Rust-ISPC and Rust without bounds checking

What is ISPC?

The Intel Implicit SPMD Program Compiler (ISPC) is a compiler for a variant of the C programming language highly optimized for SIMD CPU architectures. Programs appear far closer to shaders or GPU code (OpenCL / CUDA) than traditional C.

If you're interested I strongly recommend reading The story of ISPC memoir, a multi-part series about how the compiler came to be.

export void dotp(uniform const float X[], uniform const float Y[], uniform float Z[], uniform const uint N) {

foreach(i = 0 ... 1024) {

Z[i] += X[i] * Y[i];

}

}

If you squint the code is essentially C and, when deviating, pulling almost directly from the ISPC Performance Guide .

You might think that adding another compiler to the project would be a massive headache but luckily the rust-ispc crate comes to our rescue. The library is even split into two crates - a compile and runtime crate - such that an end user won't need ISPC if they aren't modifying the ISPC code. The ispc-rust crate handles compilation, generating bindings, and linking all for you with only a half dozen lines of code.

Rust: To try to get the exact same assembly as ISPC produces, we need to simplify our Rust version. The Rust code above doesn't assume we'll always be receiving vectors of length 1024 and so produces code that could handle varying lengths. We'll instead use a for loop instead of iterators and avoid bounds checking.

Analysis using Intel vTune

Final tally

- Naive C: 809.9 ms ± 7.3 ms

- Naive Rust (

c += a * b): 890.4 ms ± 11.8 ms - Naive Rust (

mul_add): 894.2 ms ± 17.4 ms - Rust SIMD (inline allowed): 887.4 ms ± 7.5 ms

- Rust SIMD (never inline): 734.4 ms ± 13.6 ms

- Rust SIMD (never inline + unrolled): 728.2 ms ± 17.7 ms

- Rust ISPC: 642.9 ms ± 62.3 ms

The most surprising result is the difference between the non-inlined Rust SIMD and the Rust ISPC. Both share highly similar resulting assembly and yet minor differences enable the ISPC version to perform far better.

Intel's vTune confirms this by showing the number of back-end bound operations (i.e. stalled due to waiting on dependencies (slow loads)) is noticeably higher for the Rust SIMD version than for ISPC. At this stage it's still a mystery to me.

Conclusion

With only a few lines of code we're able to achieve a near 30% speedup compared to the initial case with either hand rolled SIMD intrinsics or rust-ispc.